Cold Storage System

1 Microsoft Pelican Cold Storage System

1.1 Introduction

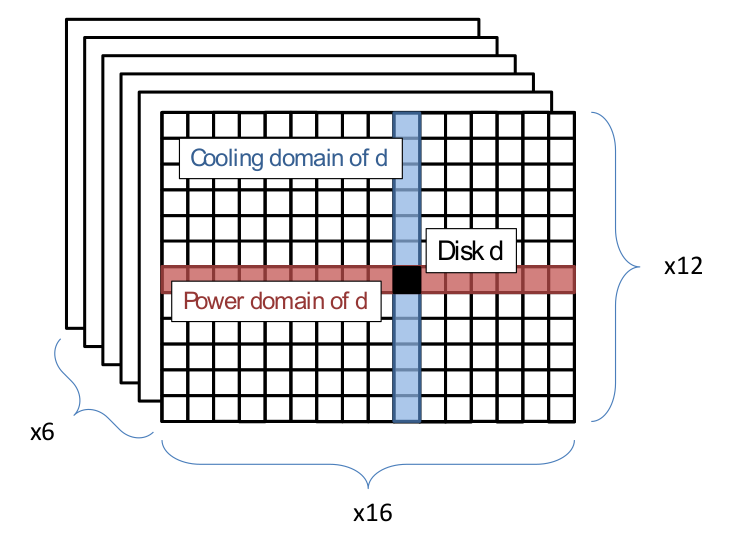

- Standard 52U rack holding 1,152 HDDs;

- 6 x 8U chassis (JBOD); each chassis has 12 trays and 192 HDDs (16 HDDs per tray);

- Rack topology: 6 x 12 x 16 (chassis x trays per chassis x HDDs per tray);

- Each tray is an independent power domain;

- Target throughput is 1 GB/s per PB, so reading back the full contents takes about 13 days;
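The 13-day figure can be sanity-checked with simple arithmetic. The sketch below is only a back-of-the-envelope check; the two unit interpretations are assumptions, since the notes do not say whether PB and GB are decimal or binary. Because the rate is quoted per PB, the result does not depend on the total rack capacity.

```python
# Back-of-the-envelope check of the full-readout time at 1 GB/s per PB.
SECONDS_PER_DAY = 24 * 60 * 60


def readout_days(capacity_bytes: float, rate_bytes_per_s: float) -> float:
    """Days needed to stream capacity_bytes at a constant rate."""
    return capacity_bytes / rate_bytes_per_s / SECONDS_PER_DAY


# Assumption 1: decimal units for both (1 PB = 10**15 B, 1 GB = 10**9 B).
decimal_days = readout_days(10**15, 10**9)

# Assumption 2: binary petabyte (2**50 B) read at a decimal gigabyte per second.
mixed_days = readout_days(2**50, 10**9)

print(f"decimal PB: {decimal_days:.1f} days")  # about 11.6
print(f"binary PB:  {mixed_days:.1f} days")    # about 13.0
```

Under the second interpretation the result lands on the quoted 13 days; under purely decimal units it is closer to 11.6 days.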

1.2 Software stack

1.2.1 data layout

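- Each blob is split into a sequence of 128 KB data fragments;

- For every k data fragments, erasure coding (EC) generates r additional redundancy fragments;

- The k+r fragments together are called a stripe;

- Each stripe is stored across k+r disks, one fragment per disk;

- k=15, r=3, storage overhead = r/k = 0.2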
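The layout rules above can be turned into a small calculation. This is a minimal sketch, not Pelican's code: `stripe_layout` is a hypothetical helper, the example blob size is made up, and it assumes a partially filled last stripe is padded to k data fragments. It counts fragments only and does not perform any erasure coding.

```python
import math

FRAGMENT_SIZE = 128 * 1024  # 128 KB data fragments, as in the notes
K, R = 15, 3                # data and redundancy fragments per stripe


def stripe_layout(blob_size: int, k: int = K, r: int = R,
                  fragment_size: int = FRAGMENT_SIZE) -> dict:
    """Count fragments and stripes for a blob of blob_size bytes.

    Assumption: a partially filled last stripe is padded to k data fragments.
    """
    data_fragments = math.ceil(blob_size / fragment_size)
    stripes = math.ceil(data_fragments / k)
    return {
        "data_fragments": data_fragments,
        "stripes": stripes,
        "stored_fragments": stripes * (k + r),  # data + redundancy on disk
        "disks_per_stripe": k + r,              # one fragment per disk
        "overhead": r / k,                      # extra storage per stored byte
    }


layout = stripe_layout(100 * 1024 * 1024)  # a hypothetical 100 MiB blob
print(layout)
```

For the 100 MiB example this gives 800 data fragments in 54 stripes, each stripe spread over 18 disks.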

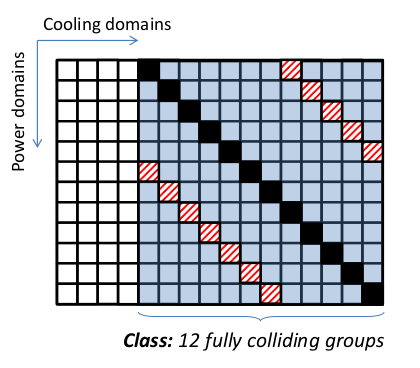

- Cooling domains and power groups;

- I/O scheduler: request reordering and rate limiting;

- Configuration: 48 groups of 24 disks each (48 x 24 = 1,152 disks);

- Metrics:

  - Completion time

  - Time to first byte

  - Service time

  - Average reject rate

  - Throughput
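To illustrate why request reordering matters when only some disk groups can be active at once, here is a minimal sketch of group-based batching. It is not Pelican's actual scheduler: `reorder_by_group`, the `max_batch` cap, and the sample arrivals are all hypothetical, and rate limiting is not modeled. The idea is simply that serving queued requests for the same group back to back reduces the number of group switches.

```python
from collections import deque


def count_group_switches(order):
    """Number of times consecutive requests target different groups."""
    groups = [group for _, group in order]
    return sum(1 for a, b in zip(groups, groups[1:]) if a != b)


def reorder_by_group(requests, max_batch):
    """Serve the oldest request, then pull forward queued requests for its group.

    requests:  list of (request_id, group) in arrival order.
    max_batch: hypothetical cap on requests served per group activation,
               so that other groups are not starved indefinitely.
    """
    pending = deque(requests)
    order = []
    while pending:
        _, group = pending[0]  # the oldest request picks the next group
        batch, rest = [], deque()
        for req in pending:
            if req[1] == group and len(batch) < max_batch:
                batch.append(req)
            else:
                rest.append(req)
        order.extend(batch)
        pending = rest
    return order


arrivals = [(0, "A"), (1, "B"), (2, "A"), (3, "C"), (4, "B"), (5, "A")]
scheduled = reorder_by_group(arrivals, max_batch=2)
print(scheduled)
print(count_group_switches(arrivals), count_group_switches(scheduled))
```

In this example FIFO order needs 5 group switches, while the batched order needs 3. The batch cap trades fewer switches against longer waits for requests targeting other groups.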

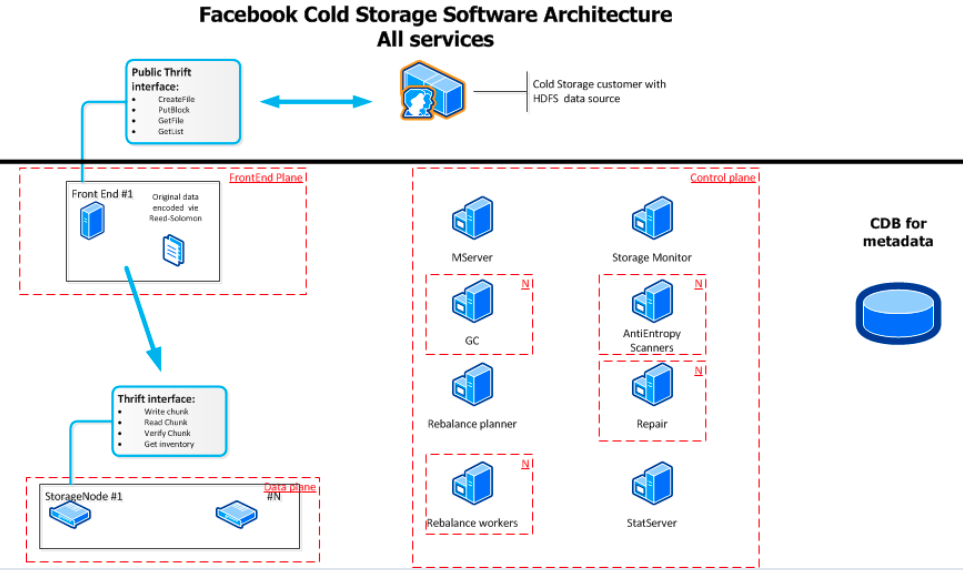

2 Facebook Cold Storage System

- Architecture

- Power groups, similar to Microsoft Pelican;

- Differences from Pelican: one disk per node, many nodes, and a predefined power cycle;

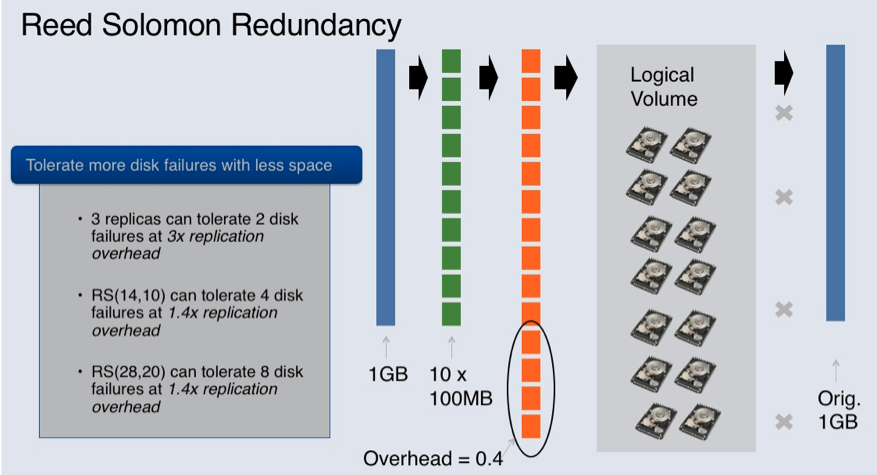

- Erasure coding: 10+4 (10 data fragments + 4 redundancy fragments), storage overhead = 4/10 = 0.4

- Metadata
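The two coding schemes in these notes can be compared side by side. The sketch below is illustrative only: the `ErasureCode` class is hypothetical, and the loss-tolerance figure assumes an MDS code such as Reed-Solomon, where any r fragments of a stripe may be lost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErasureCode:
    name: str
    k: int  # data fragments per stripe
    r: int  # redundancy fragments per stripe

    @property
    def overhead(self) -> float:
        """Extra storage relative to user data (r / k)."""
        return self.r / self.k

    @property
    def raw_bytes_per_user_byte(self) -> float:
        """Total bytes on disk per byte of user data ((k + r) / k)."""
        return (self.k + self.r) / self.k

    @property
    def tolerated_losses(self) -> int:
        """Fragments per stripe that may be lost (assumes an MDS code)."""
        return self.r


codes = [
    ErasureCode("Pelican 15+3", k=15, r=3),
    ErasureCode("Facebook 10+4", k=10, r=4),
]

for code in codes:
    print(f"{code.name}: overhead={code.overhead:.1f}, "
          f"raw/user={code.raw_bytes_per_user_byte:.1f}, "
          f"tolerates {code.tolerated_losses} lost fragments per stripe")
```

The 10+4 scheme spends twice the overhead of 15+3 (0.4 versus 0.2) and in exchange tolerates one more lost fragment per stripe while spanning fewer disks.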

3 References

- Facebook cold storage: https://engineering.fb.com/2015/05/04/core-data/under-the-hood-facebook-s-cold-storage-system/

- Freezing Exabytes of Data at Facebook's Cold Storage: https://www.digitalpreservation.gov/meetings/documents/storage14/Kestutis_Patiejunas_Facebook_FreezingExabytesOfDataFacebooksColdStorage.pdf

- CosmosStore

- CosmosStoreManager(CSM) = master

- ExtentNode(EN) = ChunkServer

- CosmosRepairManager(CRM) = Supervisor

- CosmosFrontEnd ~= DFS Proxy

- Compression is done on the client side; the minimum read unit is one compressed block;

- BatchModify(xxx) // only within a single volume;